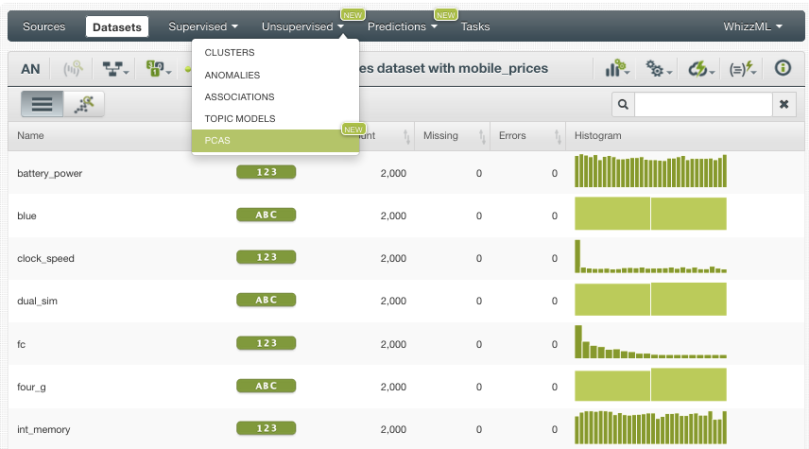

BigML’s upcoming release on Thursday, December 20, 2018, will bring our latest resource to the platform: Principal Component Analysis (PCA). This post gives a quick introduction to PCA and is the first in a series of 6 blog posts that will give you a detailed perspective of what’s behind the new capabilities. Today’s post explains the basic concepts, and it will be followed by an example use case. Then, there will be three more blog posts focused on how to use PCA through the BigML Dashboard, API, and WhizzML for automation. Finally, we will complete this series of posts with a technical view of how PCAs work behind the scenes.

Understanding Principal Component Analysis

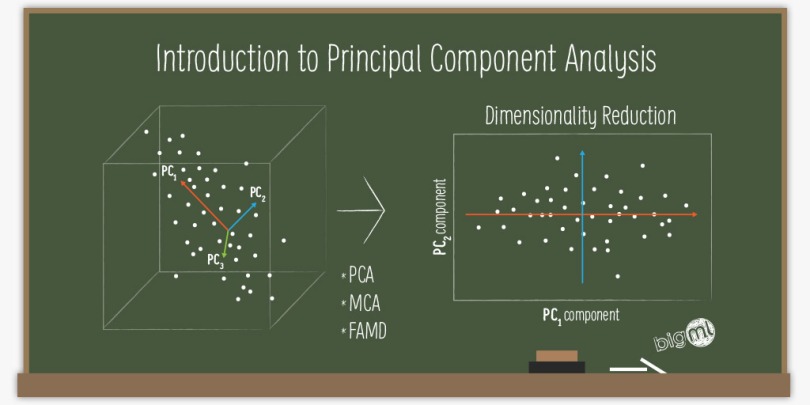

Many datasets in fields as varied as bioinformatics, quantitative finance, portfolio analysis, or signal processing can contain an extremely large number of variables that may be highly correlated, resulting in sub-optimal Machine Learning performance. Principal Component Analysis (PCA) is one technique that can be used to transform such a dataset, either to obtain uncorrelated features or as a first step in dimensionality reduction.

Because PCA transforms the variables in a dataset without accounting for a target variable, it can be considered an unsupervised Machine Learning method suitable for exploratory data analysis of complex datasets. However, when used for dimensionality reduction, it also helps reduce supervised model overfitting, as fewer relationships between variables remain to be considered after the process. To make this possible, the principal components yielded by a PCA transformation are typically ordered by the amount of variance each explains in the original dataset. The practitioner can then decide how many of the new component features can be eliminated from a dataset while preserving most of the original information contained in it.
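To make the idea of ordering components by explained variance concrete, here is a minimal sketch using NumPy. It is not BigML's implementation: the function names, the synthetic data, and the 90% threshold are all assumptions chosen for illustration.

```python
import numpy as np

def pca_explained_variance(X):
    """Return the principal axes and the fraction of variance each explains."""
    X_centered = X - X.mean(axis=0)
    # SVD of the centered data: rows of Vt are the principal axes,
    # returned in order of decreasing singular value.
    _, singular_values, Vt = np.linalg.svd(X_centered, full_matrices=False)
    variances = singular_values ** 2 / (X.shape[0] - 1)
    return Vt, variances / variances.sum()

def components_needed(ratios, threshold=0.90):
    """Smallest number of leading components whose cumulative share reaches threshold."""
    cumulative = np.cumsum(ratios)
    k = int(np.searchsorted(cumulative, threshold)) + 1
    return min(k, len(ratios))

# Synthetic data (an assumption): five correlated features built from
# two underlying signals plus a small amount of noise.
rng = np.random.default_rng(0)
signals = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 5))
X = signals @ mixing + 0.01 * rng.normal(size=(200, 5))

axes, ratios = pca_explained_variance(X)
k = components_needed(ratios, threshold=0.90)

# Project onto the first k principal axes to get the reduced dataset.
X_reduced = (X - X.mean(axis=0)) @ axes[:k].T
print("explained variance ratios:", np.round(ratios, 4))
print("components kept:", k)
```

Because the five features here are driven by only two signals, almost all of the variance ends up in the first one or two components, and the remaining ones can be dropped with little loss of information.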

Even though they are all grouped under the same umbrella term (PCA), BigML’s implementation incorporates multiple factor analysis techniques under the hood, rather than only the standard PCA implementation. Specifically:

- Principal Component Analysis (PCA): BigML utilizes this option if the input dataset contains only numerical data.

- Multiple Correspondence Analysis (MCA): this option is available if the input dataset contains only categorical data.

- Factorial Analysis of Mixed Data (FAMD): this option is available if the input dataset contains both numeric and categorical fields.
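The selection rule in the list above can be sketched as a small function. This is only an illustration of the rule as described here: `choose_technique` and the field-type labels are hypothetical names, not part of BigML's API, and text and items fields are deliberately left out.

```python
def choose_technique(field_types):
    """Pick a factor analysis technique from the kinds of fields present.

    field_types is an iterable of labels, each "numeric" or "categorical"
    (hypothetical labels used only for this sketch).
    """
    kinds = set(field_types)
    if kinds == {"numeric"}:
        return "PCA"
    if kinds == {"categorical"}:
        return "MCA"
    if kinds == {"numeric", "categorical"}:
        return "FAMD"
    raise ValueError(f"unsupported combination of field types: {sorted(kinds)}")

print(choose_technique(["numeric", "numeric"]))
print(choose_technique(["categorical"]))
print(choose_technique(["numeric", "categorical", "numeric"]))
```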

In the case of items and text fields, data is processed using a bag-of-words approach allowing PCA to be applied. Because of this nuanced approach, BigML can handle categorical, text, and items fields in addition to numerical data in an automatic fashion that does not require manual intervention by the end user.
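As a rough illustration of the bag-of-words idea, the sketch below turns a few short texts into a numeric term-count matrix, which is the kind of input a PCA can work with. The whitespace tokenization, the `bag_of_words` helper, and the sample texts are assumptions for this example; the details of how BigML tokenizes text and items fields are not covered in this post.

```python
from collections import Counter

def bag_of_words(documents):
    """Convert text documents into a vocabulary and a term-count matrix."""
    # Naive tokenization: lowercase and split on whitespace.
    tokenized = [doc.lower().split() for doc in documents]
    vocabulary = sorted({token for tokens in tokenized for token in tokens})
    matrix = []
    for tokens in tokenized:
        counts = Counter(tokens)
        # One column per vocabulary term; absent terms count as 0.
        matrix.append([counts[term] for term in vocabulary])
    return vocabulary, matrix

docs = ["red wine dry", "white wine sweet", "red red blend"]
vocabulary, matrix = bag_of_words(docs)

print(vocabulary)
for row in matrix:
    print(row)
```

Each text becomes a row of counts with one column per term, so a text field is replaced by a set of numeric columns.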

Want to know more about PCA?

If you would like to learn more about Principal Component Analysis and see it in action on the BigML Dashboard, please reserve your spot for our upcoming release webinar on Thursday, December 20, 2018. Attendance is FREE of charge, but space is limited so register soon!