In my last post, I started discussing Pedro Domingos’ excellent paper reviewing some of the underpinnings and pitfalls of machine learning, making the paper a little less academic and adding a few examples. I’ll continue that review in this post. Many of the headings come directly from the paper, and I’ll use quote marks when quoting directly.

Also note that I’m using “feature” and “field” interchangeably here, as Domingos uses the former term and BigML generally uses the latter.

Feature Engineering is the Key

We’ll start out this time with a topic that is so important that it deserves an instructive example. In fact, Domingos calls it “easily the most important factor” in determining the success of a machine learning project, and I agree with him.

Suppose you have a dataset of pairs of cities, each labeled with whether most people would consider the two cities comfortably drivable within a single day. You’ve got a nice database with the longitudes and latitudes for all of your cities, so these are your input fields. Your dataset might look something like this (note that the values don’t correspond to actual city locations – they are random):

| City 1 Lat. | City 1 Lng. | City 2 Lat. | City 2 Lng. | Drivable? |
|---|---|---|---|---|
| 123.24 | 46.71 | 121.33 | 47.34 | Yes |
| 123.24 | 56.91 | 121.33 | 55.23 | Yes |
| 123.24 | 46.71 | 121.33 | 55.34 | No |
| 123.24 | 46.71 | 130.99 | 47.34 | No |

And you expect to construct a model that can predict, for any two cities, whether the distance between them is drivable.

Probably not going to happen.

The problem here is that no single input field, or even any single pair of fields, is closely correlated with the objective. What correlates with the objective is a combination of all four fields: the distance from one pair of geo-coordinates to the other, computed by a fairly complex formula. Machine learning algorithms are limited in the ways they can combine input fields. If they weren’t, they would exhaust themselves trying every possible combination.

But all is not lost! Even if the machine doesn’t know anything about how longitudes and latitudes work, you do. So do the combination yourself: apply the distance formula to each instance and get a dataset like this (again, random values):

| Distance (mi.) | Drivable? |
|---|---|
| 14 | Yes |
| 28 | Yes |
| 705 | No |
| 2432 | No |

Ah. Much more manageable. This is what we mean by feature engineering. It’s when you use your knowledge about the data to create fields that make machine learning algorithms work better.
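The post doesn’t say which distance formula to use. A common choice for two latitude/longitude pairs is the haversine (great-circle) formula. A minimal Python sketch of the feature transformation might look like the one below. It assumes coordinates in decimal degrees and uses hypothetical field names (`lat1`, `lng1`, `lat2`, `lng2`, `drivable`), not names from any BigML schema:

```python
import math

EARTH_RADIUS_MI = 3958.8  # mean Earth radius in miles

def haversine_miles(lat1, lng1, lat2, lng2):
    """Great-circle distance in miles between two (lat, lng) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lng2 - lng1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_MI * math.asin(math.sqrt(a))

def add_distance_feature(rows):
    """Replace the four coordinate fields with one engineered distance field.
    The field names used here are hypothetical."""
    return [
        {
            "distance_mi": round(haversine_miles(r["lat1"], r["lng1"], r["lat2"], r["lng2"])),
            "drivable": r["drivable"],
        }
        for r in rows
    ]
```

For example, `haversine_miles(0, 0, 0, 1)` (one degree of longitude along the equator) comes out to roughly 69 miles.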

Domingos mentions that feature engineering is where “most of the effort in a machine learning project goes”. I couldn’t agree more. In my career, I would say that on average 70% of a project’s time goes into feature engineering. Another 20% goes toward figuring out what constitutes a proper and comprehensive evaluation of the algorithm, and only 10% goes into algorithm selection and tuning.

How does one engineer a good feature? One good rule of thumb is to design features where the likelihood of a certain class goes up monotonically with the value of the field. In our example above, “drivable = no” becomes more likely as distance increases, but that’s not true of longitude or latitude. You probably won’t be able to engineer a feature where this is strictly true, but a feature that comes reasonably close to that ideal is still a good one.
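One quick way to check this rule of thumb on a candidate field is to bin the field and watch how the rate of the target class changes from bin to bin. Here is a minimal Python sketch. The function names and the equal-width binning scheme are illustrative choices, not something from the paper or from BigML:

```python
def class_rate_by_bin(values, labels, target, n_bins=4):
    """Fraction of rows with label == target in each equal-width bin of a numeric field."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins or 1.0  # avoid zero width when all values are equal
    counts = [0] * n_bins
    hits = [0] * n_bins
    for v, y in zip(values, labels):
        b = min(int((v - lo) / width), n_bins - 1)  # clamp the max value into the last bin
        counts[b] += 1
        hits[b] += (y == target)
    # None marks empty bins, which carry no evidence either way
    return [h / c if c else None for h, c in zip(hits, counts)]

def is_roughly_monotonic(rates):
    """True if the rates of the non-empty bins never decrease."""
    seen = [r for r in rates if r is not None]
    return all(a <= b for a, b in zip(seen, seen[1:]))

# The engineered distance field from the table above, checked against "drivable = no"
rates = class_rate_by_bin([14, 28, 705, 2432], ["Yes", "Yes", "No", "No"], target="No")
print(rates, is_roughly_monotonic(rates))  # [0.0, 1.0, None, 1.0] True
```

On real data you would want many more rows per bin before trusting the pattern.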

Typically, there isn’t a single data transformation that makes learning immediately easy (as there was in the above example), but there are usually one or more things you can do to the data to make machine learning easier. There’s no formula for this, and a lot of it happens by itch and by twitch. BigML attempts to do some of the easy ones for you (automated date parsing is an example), but far more interesting transformations become possible with detailed knowledge of your specific data. Great things happen in machine learning when human and machine work together, combining a person’s knowledge of how to create relevant features from the data with the machine’s talent for optimization.

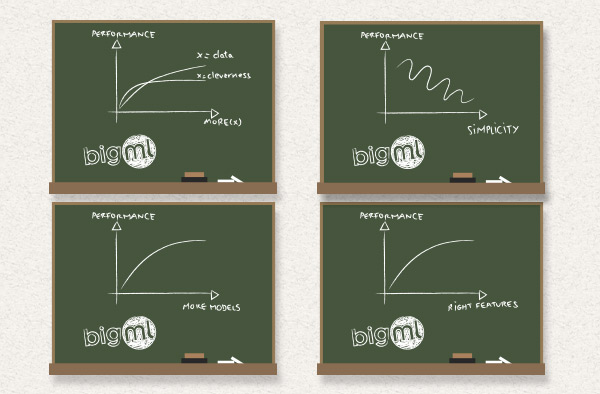

More Data Beats A Cleverer Algorithm

More data wins. It’s not just Domingos saying this. There’s increasingly good evidence that, in a lot of problems, very simple machine learning techniques can be leveraged into incredibly powerful classifiers with the addition of loads of data.

A big reason for this is that, once you’ve defined your input fields, there’s only so much analytic gymnastics you can do. Computer algorithms trying to learn models have relatively few tricks they can perform efficiently, and many of those tricks are not so very different from one another. So, as we have said before, performance differences between algorithms are typically not large. If you want better classifiers, you should spend your time:

- Engineering better features

- Getting your hands on more high-quality data
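A small synthetic experiment makes this concrete. The data is made up for illustration and does not come from the paper. Labels follow a hidden 500-mile cutoff, and the "learner" is 1-nearest-neighbor, about as simple as classifiers get. Accuracy on a fixed test set should climb as the training set grows, but the exact numbers depend on the random seed:

```python
import random

def make_data(n, rng, threshold=500.0, max_dist=3000.0):
    """Synthetic (distance, drivable) pairs: drivable iff distance <= threshold (hypothetical rule)."""
    xs = [rng.uniform(0.0, max_dist) for _ in range(n)]
    return [(x, x <= threshold) for x in xs]

def one_nn_predict(train, x):
    """1-nearest-neighbor: copy the label of the closest training distance."""
    return min(train, key=lambda p: abs(p[0] - x))[1]

def accuracy(train, test):
    """Fraction of test rows whose label 1-NN predicts correctly."""
    return sum(one_nn_predict(train, x) == y for x, y in test) / len(test)

rng = random.Random(0)
test_set = make_data(1000, rng)
for n in (5, 50, 500, 2000):
    # Same simple algorithm every time; only the amount of training data changes.
    print(n, round(accuracy(make_data(n, rng), test_set), 3))
```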

Learn Many Models, Not Just One

Here, Domingos discusses the power of ensembles, which we’ve blogged about before. There’s no need to discuss them at length here, but the idea bears repeating in brief: one can often make a more powerful model by learning multiple classifiers over different random subsets of the data. The downside is that some interpretability is lost. Instead of a single sequence of questions and answers leading to a prediction, you now have a sequence for each model, and the models vote on the final prediction. However, if predictive performance is what matters most in your application, the loss in interpretability might be worth the increase in power.
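Here is a minimal bagging sketch in Python. It assumes the single engineered distance field from the example above and uses one-split "decision stumps" as the base model. That is a simplification, since practical ensembles typically use deeper trees. Each model is fit on a bootstrap sample (a random sample of the rows drawn with replacement), and the ensemble predicts by majority vote:

```python
import random
from collections import Counter

def fit_stump(sample):
    """Pick the cutoff t minimizing training errors for the rule 'drivable iff distance <= t'."""
    xs = sorted({x for x, _ in sample})
    candidates = [xs[0] - 1.0] + xs  # include a cutoff below every point

    def errors(t):
        return sum((x <= t) != y for x, y in sample)

    return min(candidates, key=errors)

def fit_bagged_stumps(data, n_models=25, seed=0):
    """Bagging: fit each stump on its own bootstrap sample of the data."""
    rng = random.Random(seed)
    return [fit_stump([rng.choice(data) for _ in data]) for _ in range(n_models)]

def predict(cutoffs, distance):
    """Each stump votes True/False; the majority label wins."""
    votes = Counter(distance <= t for t in cutoffs)
    return votes.most_common(1)[0][0]

# Hypothetical training data: drivable iff distance <= 500 miles
rng = random.Random(42)
data = [(x, x <= 500.0) for x in (rng.uniform(0, 3000) for _ in range(200))]
cutoffs = fit_bagged_stumps(data)
print(predict(cutoffs, 120.0), predict(cutoffs, 1800.0))
```

The interpretability cost shows up directly here. Each stump is a single readable rule, but the ensemble's answer is a vote over 25 slightly different rules.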

Simplicity Does Not Imply Accuracy

There is an old principle for choosing between competing hypotheses known as Occam’s Razor. Translated from the original fancy Latin, it reads something like, “Plurality is not to be posited without necessity”. In layman’s terms, if you have two explanations for something, you should generally prefer the simpler one. For example, suppose you wake up in the middle of a cornfield with no memory of what you did the previous night. One explanation is that you were abducted by aliens who implanted a memory suppression device in your brain. Another is that you got really drunk. The latter is simpler and therefore preferred (unless you are a member of the fringe media).

So too in machine learning. If we have two models that fit the data equally well, many machine learning algorithms have a way of mathematically preferring the simpler of the two. The folk wisdom here is that a simpler model will perform better on out-of-sample test data because it has fewer parameters to fit and is thus less likely to be overfit (see part one for more on the dangers of overfitting).
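One common way algorithms "mathematically prefer" the simpler model is to add a complexity penalty to the fitting objective, usually called regularization. In generic form, $L$ is a loss measured on the $n$ training pairs $(x_i, y_i)$ and $\Omega(f)$ is some measure of model complexity, such as the number of nodes in a tree or the squared size of a model's weights. The weight $\lambda \ge 0$ controls how strongly complexity is penalized. Which $\Omega$ a particular algorithm uses varies, so treat this as a sketch of the general shape:

```latex
\hat{f} \;=\; \arg\min_{f \in \mathcal{F}} \; \sum_{i=1}^{n} L\big(y_i, f(x_i)\big) \;+\; \lambda \, \Omega(f)
```

When two candidate models fit the data equally well, their loss sums are equal, so the penalty term breaks the tie in favor of the simpler one.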

One should not take this rule too far. There are many places in machine learning where additional complexity can improve performance. On top of that, it is not quite accurate to say that model complexity leads to overfitting. It is more accurate to say that the procedure used to fit all that complexity leads to overfitting if it is not very clever. There are plenty of cases where the complexity is brought to heel by cleverness in the model fitting process.

Thus, prefer simple models because they are smaller, faster to fit, and more interpretable, but not necessarily because they will lead to better performance; the only way to know that is to evaluate your model on test data.
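In practice, "evaluate your model on test data" can be as simple as holding out a random slice of rows that the model never sees during fitting. Here is a minimal Python sketch on made-up, deliberately noisy distance data. It compares a one-cutoff model with a 1-nearest-neighbor model that memorizes every training row. Which one wins depends on the data, which is exactly why you measure it rather than assume it:

```python
import random

def holdout_split(data, test_frac=0.3, seed=0):
    """Shuffle a copy of the rows, then hold out test_frac of them for testing."""
    rows = list(data)
    random.Random(seed).shuffle(rows)
    n_test = round(len(rows) * test_frac)
    return rows[: len(rows) - n_test], rows[len(rows) - n_test :]

def fit_threshold(train):
    """Simpler model: one cutoff t ('drivable iff distance <= t') minimizing training errors."""
    candidates = sorted(x for x, _ in train)
    best = min(candidates, key=lambda t: sum((d <= t) != y for d, y in train))
    return lambda x: x <= best

def fit_1nn(train):
    """More complex model: memorize every training row; predict the nearest row's label."""
    return lambda x: min(train, key=lambda p: abs(p[0] - x))[1]

def holdout_accuracy(model, test):
    """Fraction of held-out rows the model labels correctly."""
    return sum(model(x) == y for x, y in test) / len(test)

# Made-up data: drivable iff distance <= 500 miles, with about 15% of labels flipped as noise.
rng = random.Random(7)
data = []
for _ in range(300):
    x = rng.uniform(0, 3000)
    data.append((x, (x <= 500) != (rng.random() < 0.15)))

train, test = holdout_split(data)
for name, fit in (("threshold", fit_threshold), ("1-NN", fit_1nn)):
    print(name, round(holdout_accuracy(fit(train), test), 3))
```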

Representable Does Not Imply Learnable

The creators of many machine learning algorithms are fond of pointing out that their algorithm can represent the function that makes accurate predictions on your data. This means that it is possible, in principle, for the algorithm to build a good model on your data.

Unfortunately, this possibility is rarely comforting by itself. Building a good model may require much more data than you have, or the good model might simply never be found by the algorithm. Just because there’s a good model out there that the algorithm could find does not mean that it will find it.

This is another great argument for feature engineering: If the algorithm can’t find a good model, but you are pretty sure that a good model exists, try engineering features that will make that model a little more obvious to the algorithm.

Correlation Does Not Imply Causation

This is such an old adage in statistics that Domingos nearly leaves it out, but it is so important that he mentions it anyway.

The point of this common saying is that modeling observational data can only show us that two variables are related. It cannot tell us the “why”. Consider a soon-to-be-classic example from the excellent book Freakonomics. Data from public school test scores showed that children who lived in homes with a high number of books tended to have higher standardized test scores than those with fewer books in the house. The governor of Illinois, doubtless while screaming “Science!” at all of his advisors, proposed a program to send free books to the homes of children, which would definitely raise their test scores, right?

It turns out, of course, that intelligence doesn’t really work like that. More likely, the relationship exists because smarter and more affluent parents tend to buy books and also do a whole bunch of other helpful things for their children. The books don’t cause the kids to be successful, they are just good indicators of success.
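A toy simulation makes the books example concrete. All numbers below are invented for illustration and are not from Freakonomics. A hidden "parental resources" variable drives both the number of books at home and the test score, and books have no effect on scores at all. Books still correlate strongly with scores, yet handing out extra books leaves average scores unchanged:

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def simulate(n, rng, give_books=0):
    """Hypothetical model: an unobserved confounder drives BOTH books and scores.
    give_books adds books to every home without changing the confounder."""
    books, scores = [], []
    for _ in range(n):
        resources = rng.gauss(0, 1)  # unobserved confounder
        books.append(50 + 20 * resources + rng.gauss(0, 5) + give_books)
        scores.append(500 + 40 * resources + rng.gauss(0, 20))  # no books term at all
    return books, scores

rng = random.Random(1)
books, scores = simulate(5000, rng)
print("correlation(books, scores):", round(pearson(books, scores), 2))

_, scores_after = simulate(5000, rng, give_books=30)
print("mean score before:", round(sum(scores) / len(scores)))
print("mean score after free books:", round(sum(scores_after) / len(scores_after)))
```

A model trained on this data would happily use books to predict scores, and it would be right to. It would still be wrong to conclude that giving out books raises scores.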

You should take similar care when interpreting your models. Just because one thing predicts another doesn’t mean it causes it. Business (or public policy) decisions based on an imagined causal relationship should be made with extreme caution.

The Big Picture

Machine learning is an awfully powerful tool, and like any powerful tool, misuses of it can cause a lot of damage. Understanding how machine learning works and some of the potential pitfalls can go a long way towards keeping you out of trouble.

An overall attitude that I find helpful is one of skeptical optimism: If you think you have a good model, try first to find all of the ways you think it might be broken, paying special attention to problems caused by overfitting or too many features.

If your model isn’t performing well, don’t lose heart! With a combination of feature engineering and gathering more data, the path to a better model is sometimes shorter than you think.
